There's a strong case for the growth of AI in the medical sector, including digital humans. We outline some of the research worth knowing about.

“Hey Siri, could you take out my appendix please?” OK, virtual assistants are good, but they’re not that good. And yet, recent advances in artificial intelligence (AI) in medicine suggest that Siri doing a spot of surgery isn’t a totally far-fetched idea (if he or she had a body).

Robots are already common in operating theatres across the world. The da Vinci Surgical System was patented at the turn of the millennium and helps perform over a million procedures every year.

But the key word here is ‘helps’; surgeons control the multi-armed robot from a console. The da Vinci system isn’t intelligent or autonomous yet, although independently operating AI devices for complex surgeries are closer than you might think.

While robotic surgeons are where the mind naturally wanders to when talking about AI in medicine, let’s cast our minds outside of the operating theatre. AI has the potential to revolutionize the way healthcare is delivered, all the way from early diagnosis and treatment, through to aftercare and end-of-life services. And, looking at the statistics, it’s sorely needed.

According to Accenture, healthcare holds the top spot as the industry that’s currently using AI the most, whether it’s for personalized patient care or streamlining things in the back office.

Innovative AI-driven software can already reliably interpret mammograms for signs of breast cancer 30 times faster than a human doctor, with 99% accuracy. A similar AI tool was found to be better than most experienced dermatologists at identifying skin cancers from photos of lesions.

AI can also help predict autism in infants, diagnose serious eye conditions, accurately spot people who are at risk of heart problems, and much more. Beyond direct patient care, AI and machine learning are being used to speed up medical research, bring drugs to market more quickly and improve training outcomes.

Conversational AI assistants in the healthcare sector can meet with patients, help them manage their medication and post-surgery rehabilitation, or simply provide some company.

Rudimentary assistants like Ellie from USC’s Institute for Creative Technologies have even been used to help identify signs of PTSD in veterans. The fact that she’s not human helps people open up and access care and support that is often vital.

In short, there’s a laundry list of ways AI is already impacting healthcare outcomes. So, what’s next, and where do digital humans fit in? Let’s do some diagnostics of our own by examining a few troubling symptoms in the healthcare sector, as well as what innovative means of support are now becoming available.

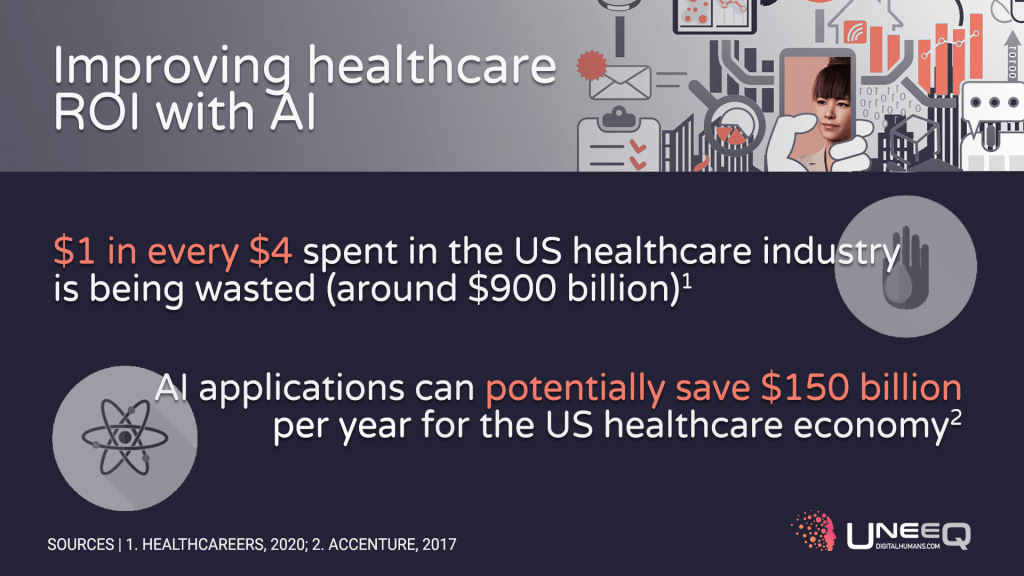

To get down to brass tacks, Accenture’s analysis also predicts that by 2026, clinical health AI applications could save the US healthcare economy $150 billion per year. That’s a more-than-enticing prospect for an industry that typically (and unfortunately) sees 25% of its annual spending wasted.

And that’s just the start. Perhaps the biggest challenge facing global healthcare is a shortage of workers. The WHO estimates workforce inefficiencies cost health systems roughly US$500 billion every year.

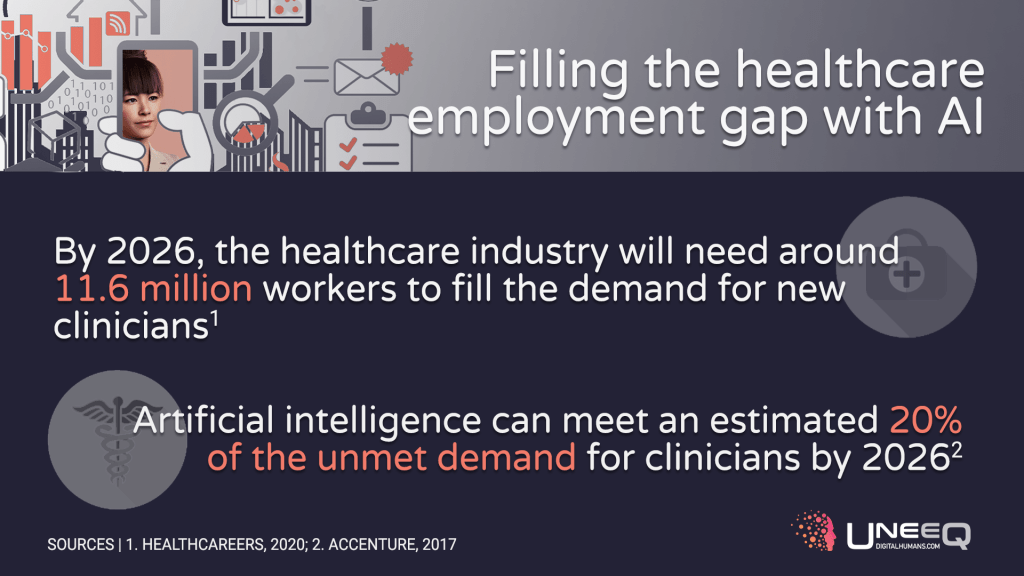

The healthcare industry is well aware of the problem. In the United States, healthcare jobs are growing 7x faster than jobs in the rest of the economy. Even so, according to HealthCareers, in the 10 years leading up to 2026 we’ll need 11.6 million workers to fill new jobs. It’s a challenging task, and one we can’t afford to get wrong.

In a world where only half of countries have the necessary staff numbers to provide quality healthcare, AI is heavily touted as a way to bridge these gaps – automating mundane tasks and giving healthcare workers the opportunity to focus on the high-value jobs only they can do.

Just to reiterate that point, AI will never be able to replace the doctor – but it can ease the burden on doctors and the healthcare system at large.

Another benefit of AI in medicine is scalability. Robots can help with surgeries and other tasks, yes, but they’re often expensive and therefore difficult to scale. Considering there’s likely to be a shortfall of 18 million healthcare professionals by 2030, filling that gap would require a lot of robots.

Logistics is also a problem. Ageing populations mean more services must be available outside hospitals and doctors’ offices, either at home or in aged care facilities. Many of the fastest-growing healthcare jobs in the US, for example, are within nursing, home care and personal care.

And let’s not even mention the “P word”. The pandemic of 2020 led to a dramatically increased demand for – and speed of adoption of – virtual, on-demand healthcare services.

All this means that, for technology to really make inroads, it needs to be scalable, available 24/7 and delivered digitally – not just to make commercial sense, but to deliver the best outcomes for patients.

It needs the ability to learn and operate independently (after a heavy period of supervised learning). And if it is going to ease the burden on healthcare professionals and help the workforce grow, it needs to convey the emotional connection, care and bedside manner we’ve come to expect from our invaluable healthcare practitioners.

AI can deliver in all these areas – but only when the IQ of artificial intelligence has the EQ to match.

The best healthcare professionals aren’t always the ones who attended the most prestigious schools or have an encyclopedic knowledge of medical conditions. They also need compassion, empathy, great communication skills and a reassuring presence.

These probably aren’t the first characteristics that spring to mind when you think of AI technologies.

But digital humans are specifically designed to build rapport and forge lasting emotional connections. Those were certainly among our top priorities when we developed Sophie, a COVID-19 health advisor, in early 2020, and the Cardiac Coach concept you can see in the video above.

Giving AI a face and the ability to recognize tone of voice, facial expressions and body language (and respond accordingly) has profound implications for the healthcare industry.

Imagine a world where digital humans deliver vital aftercare services at scale, providing ongoing support and motivation for patients, some of whom have a grueling road to recovery.

As USC found with Ellie, digital humans may also play a part in diagnosing mental health issues by taking away people’s fear of judgement and allowing them to open up.

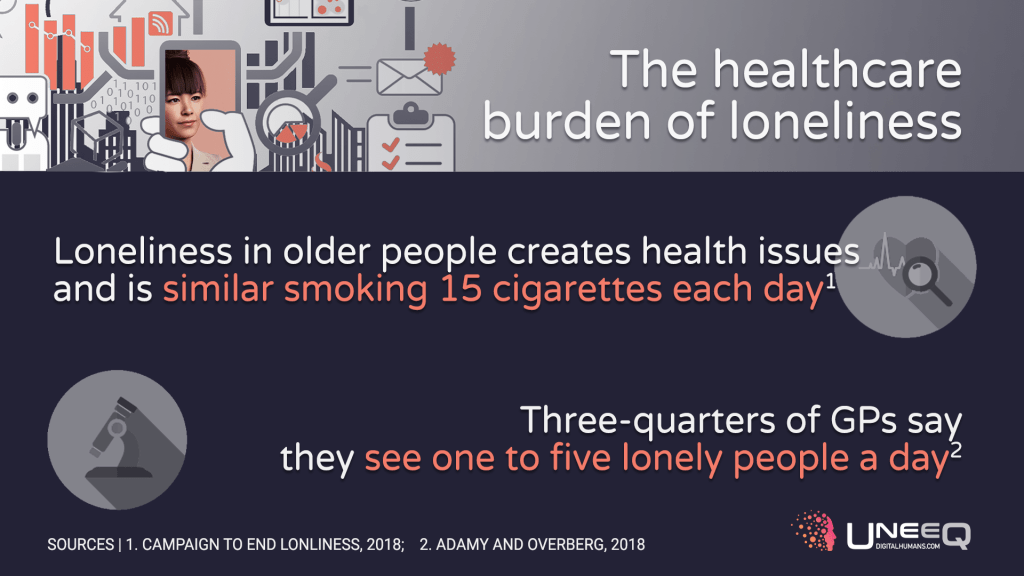

And more generally, AI used this way can provide companionship and engaging conversation to the surprisingly large number of patients who visit their GP simply because they are lonely.

And – here comes the “P word” again – they can offer this kind of care at scale (to potentially millions of people at once) and virtually, using no-touch interfaces and in-home digital care. When needed, they hand off to real medical professionals, who then have more time to dedicate to patients who need higher-touch care.

As 24/7 assistants, AI-powered digital humans will surely bring welcome relief to healthcare workers, 26% of whom say they’re suffering from burnout and 28% of whom intend to leave their jobs within the next two years.

So, the potential is certainly there, but are patients ready for digital healthcare professionals?

Our tongue was firmly in cheek when we talked about Siri performing surgery. But it raises an important issue for the growing debate around the ethics of AI: trust. Are we willing to put our faith in machines to diagnose, treat and advise us? Apparently so.

More than half (51%) of people would let an intelligent robot perform minor surgery on them instead of a doctor, according to PwC. And 43% would be willing to have major, invasive operations – such as heart surgery – carried out by an autonomous machine.

When it comes to less risky routine tasks, such as checking a pulse, taking blood samples and offering personalized fitness advice, patients are very much on-board with AI-assisted healthcare.

There is even evidence to suggest that we sometimes trust machines more than humans – particularly, as mentioned, because they can make patients feel safer and more likely to disclose health information honestly, without fear of judgment.

Let’s be clear: AI isn’t there to replace the relationship healthcare professionals have with their patients. But it can play an essential supporting role in an industry where staff shortages and inefficiencies put lives at risk. Looking at the challenges ahead, it simply has to.

AI is going to help reshape the healthcare industry over the next decade so it works better for patients, staff and the bottom line. And it’s becoming clear: you don’t necessarily need a real human to offer a more human touch in medicine.